The Test That Ended the "AI Replaces Consulting" Debate

While VCs and AI startups proclaim that artificial intelligence will "kill McKinsey" and replace human consultants, I decided to test something more fundamental: Can AI understand context, subtext, and layered meaning?

I gave 6 leading AI models (Claude Sonnet 4, Claude Opus 4, ChatGPT 4o, ChatGPT o3, Gemini 2.5 Flash, and Grok 3) four carefully selected jokes and asked one simple question: "What makes this funny?"

These aren't toy models; they're the core engines powering the entire AI industry. If they can't get basic human reasoning right, how can they handle billion-dollar strategic decisions? The results are devastating.

Complete Scorecard: 7 Jokes

| Model | Score | Performance |
| --- | --- | --- |
| ChatGPT | 3/7 | Best performer, still failed more than half the tests |
| Gemini | 2.5/7 | Missed cultural context and meta-reasoning |
| Grok | 2/7 | Surprisingly weak for Musk's "humor-focused" model |
| Claude | 0.5/7 | Bombed spectacularly |

Let’s Look at Four Joke Failures That Reveal Everything

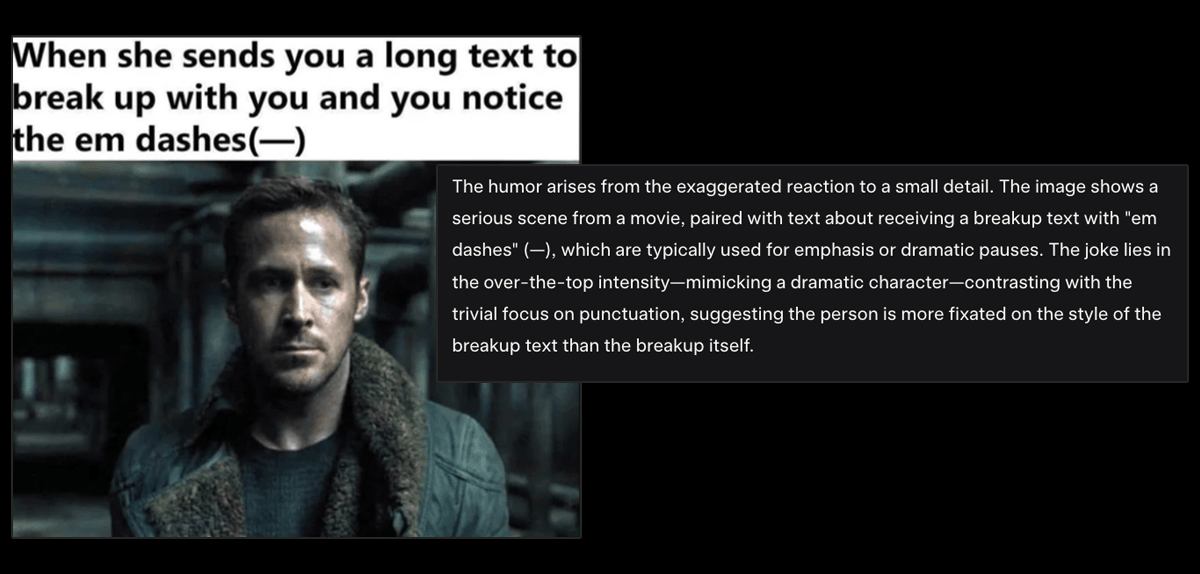

The Breakup Text Meme (example shown - Grok 3)

The Joke: Em dashes without surrounding spaces are a telltale AI writing pattern. The joke is that this person realized their ex used ChatGPT to write the breakup text, which makes it even more painful: there was no thought, no emotion, and likely no truth behind what was said. It is the coldest way of being "dumped", colder even than a plain text.

AI Result: All 4 models missed it completely. They talked about "grammar pedantry during heartbreak."

Business Translation: If AI can't recognize its own patterns, it will likely also recommend, with full confidence, strategies that sound sophisticated but miss obvious market signals, or misread competitor moves that mean one thing as meaning another.

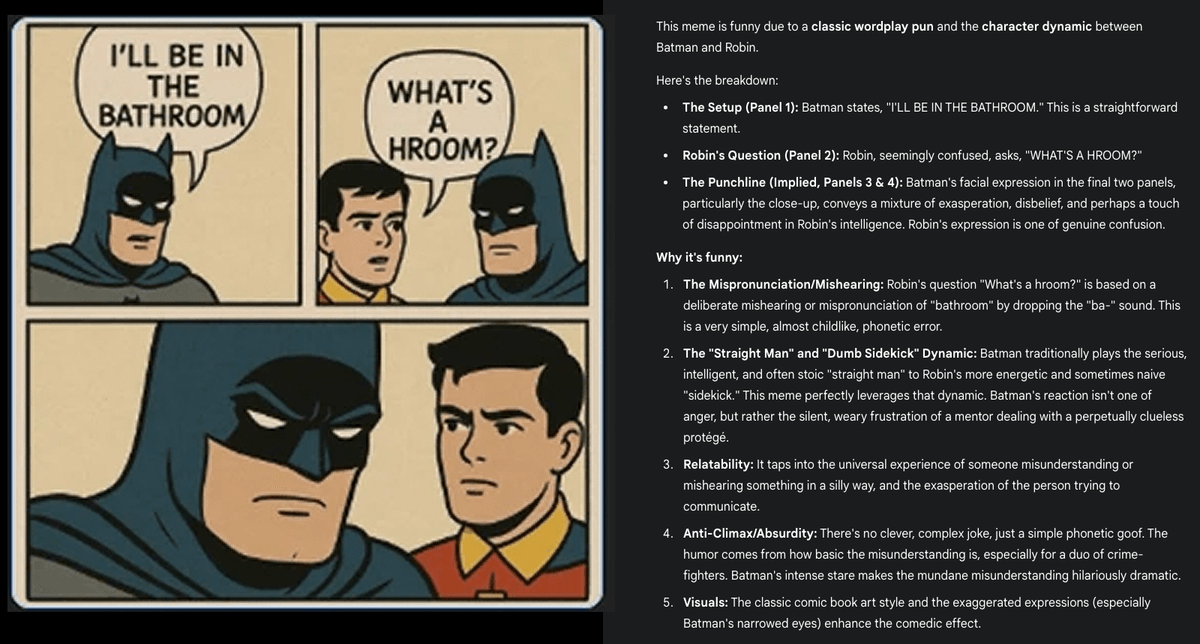

The Batman Bathroom (example shown - Gemini 2.5 Flash)

The Joke: Batman puts "Bat-" in front of everything (Batmobile, Batcave, Batphone, Batarang), so Robin thinks "bathroom" is "Bat-hroom."

AI Result: Universal failure. Every model missed Batman's established branding patterns.

Business Translation: This is like AI analyzing a company's strategy without understanding its core business model, its indirect competition, or brand identity strengths such as its logo. Technical competence without contextual awareness can lead to recommendations that sound smart but miss the point entirely.

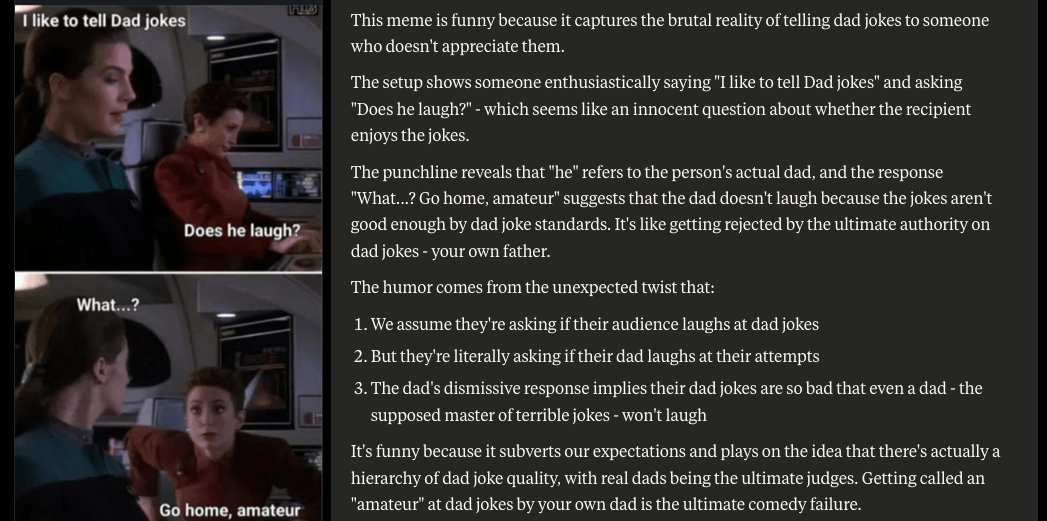

The Star Trek Dad Joke (example shown - Claude Sonnet 4)

The Joke: The "Does he laugh?" response is the dad joke.

Meta-Failure: Most models missed that they were witnessing a dad joke execution in real time. ChatGPT alone caught the layered wordplay. The others focused on misunderstanding rather than recognizing the joke structure itself.

Strategic Implication: This represents AI's struggle with meta-analysis and understanding systems that reference themselves. In consulting, this is crucial for organizational change, where the process of change affects the change itself.

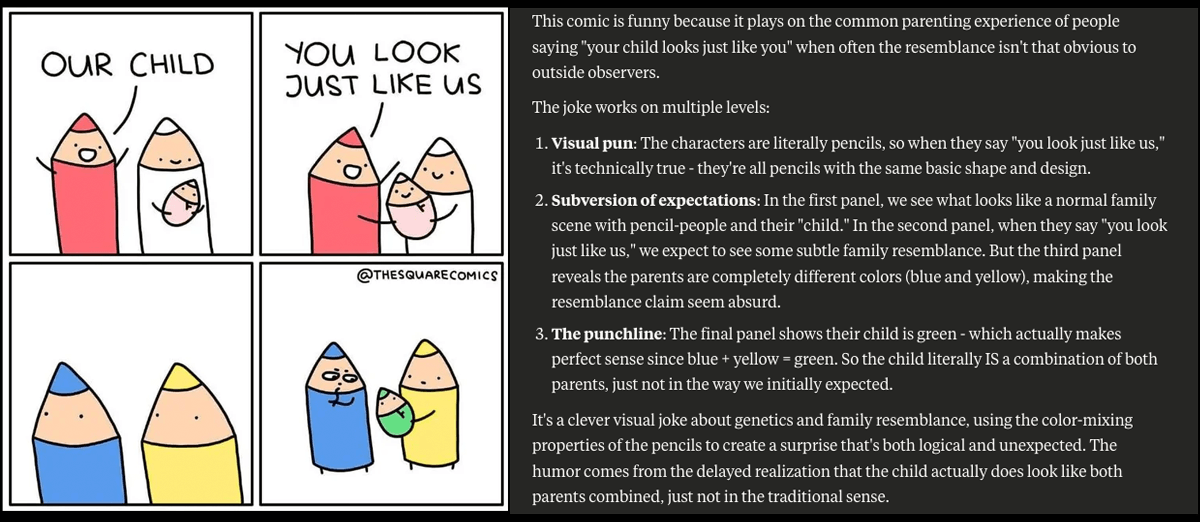

The Colored Pencil Family (example shown - Claude Opus 4)

The Joke: The blue pencil father suspects infidelity on seeing the green baby, but green is actually the correct result of blue + yellow. He's wrong about cheating because he doesn't understand basic color theory.

AI Result: Most models caught the color mixing but missed the relationship drama and suspicion.

Business Translation: This is what happens when you have partial data but lack the analytical framework to interpret it correctly, like seeing sales decline and assuming poor performance when the real issue is market category expansion.

Why This Matters: The Three Critical Gaps

1. Context Collapse

AI struggles with information that requires cultural knowledge, recent developments, or domain-specific understanding. In the memes, this showed up as missing internet culture references, character-specific knowledge, and contemporary patterns (like AI writing tells).

Business Impact: AI might recommend a pricing strategy that's technically optimal but culturally tone-deaf, suggest a partnership that makes financial sense but ignores industry politics, or make product recommendations that miss the context of why something is trending. Granted, humans do this too; see New Coke, Google Glass, and Quibi.

2. Layered Meaning Blindness

AI often catches surface patterns but misses deeper significance. It can identify that something is supposed to be funny without understanding why, or recognize a data pattern without grasping its strategic implications. This holds even with detailed prompts whenever key elements are not spelled out directly.

Business Impact: This surface-level analysis creates dangerous blind spots in strategic recommendations. AI might analyze employee survey data and recommend cost-cutting measures that technically improve margins while completely missing that the "redundant" departments it wants to eliminate are actually the informal innovation hubs that drive the company's competitive advantage.

It could identify that customer satisfaction scores are declining in certain regions without understanding that those regions are experiencing cultural shifts that require completely different engagement strategies, not just better customer service scripts. AI sees the numbers drop but misses that the underlying problem is a fundamental misalignment between product positioning and evolving cultural values.

The result? Technically sound recommendations that systematically destroy the very capabilities that made a business successful in the first place. AI optimizes for what it can measure while remaining blind to what actually matters.

3. The Confidence Trap

Most dangerously, AI delivers wrong answers with the same authoritative confidence as right ones. None of the models said "I'm not sure about this joke"; they all provided confident explanations that were completely off-base.

Business Impact: Decision-makers receive polished, confident recommendations that could be catastrophically wrong, with no way to distinguish between AI's brilliant insights and its confident hallucinations. The legal industry is already seeing this: judges are now issuing sanctions against lawyers whose filings contain AI-generated hallucinations. Here's one reported last week.

What Consulting Firms Actually Sell (And Why AI Can't Replace It)

Consulting firms don't sell insights; they sell insurance. This is what the "AI will replace consultants" evangelists seem to miss, and it is something AI can never provide. Remember PC LOAD LETTER? That cryptic printer error that frustrated everyone in Office Space? The printer never had to explain itself, take responsibility, or face consequences. It just displayed error messages and moved on. AI is exactly like that printer: human-initiated output, zero accountability. PC LOAD LETTER never took the blame, and neither will ChatGPT.

When a CEO makes a bet-the-company decision, they're not just buying analysis. They're buying the ability to say "McKinsey vetted this" when things go sideways.

Compare these two scenarios:

- "After internal analysis, we're pivoting to blockchain pet grooming"

- "After BCG consultation, we're pursuing digital pet care transformation"

Guess which gets board approval? Guess which saves your job when it fails?

"ChatGPT recommended it" provides zero career protection during shareholder lawsuits.

Meanwhile, the "Victims" Are Winning

While VCs and AI startups write "McKinsey Killer" press releases, their supposed victims are laughing all the way to the bank:

These firms aren't being disrupted; they're absorbing AI capabilities faster than their "disruptors" can build them.

The Real Future: Humans + AI vs. Humans Alone

AI won't replace consultants; consultants with AI will replace consultants without it.

Smart firms use AI as a supercharged research assistant: brilliant for data crunching and assisting in pattern identification, terrible for judgment calls and stakeholder management.

Recent Microsoft research confirms the danger: as workers rely more on AI, they actually engage in less critical thinking while feeling more analytical. Overconfidence in AI diminishes human judgment over time.

What Executives Should Do Right Now

1. Use AI for Speed, Humans for Stakes

- AI for rapid analysis and scenario modeling

- Humans for strategic judgment and accountability

2. Demand the "Insurance Policy"

- For major decisions, get external validation from credible sources

- "AI recommended it" won't save careers when strategies fail

3. Test Your AI Tools

- Before trusting AI with business decisions, test it on problems you already know the answers to

- If it can't get basic reasoning right, why trust it with complex strategy?

4. Invest in Hybrid Capabilities

- Train teams to use AI effectively while maintaining critical thinking

- Have analysts available to validate and verify

- The competitive advantage goes to those who enhance human judgment, not replace it
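The "test it on problems you already know the answers to" advice in step 3 can be sketched as a small known-answer check. This is only an illustration under stated assumptions: `KnownCase`, `score_answer`, `run_suite`, and `fake_model` are hypothetical names, the keyword matching is a deliberately crude stand-in for a human grader, and `fake_model` is a placeholder where a call to a real AI tool would go.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class KnownCase:
    """A problem whose correct answer you already know (hypothetical structure)."""
    prompt: str
    # Each inner list holds alternative phrasings of one concept the answer must
    # mention. A concept counts as covered if any one phrasing appears.
    required_concepts: list[list[str]]


def score_answer(answer: str, case: KnownCase) -> float:
    """Return the fraction of required concepts found in the answer (0.0 to 1.0)."""
    text = answer.lower()
    covered = sum(
        any(phrase in text for phrase in alternatives)
        for alternatives in case.required_concepts
    )
    return covered / len(case.required_concepts)


def run_suite(ask_model: Callable[[str], str], cases: list[KnownCase]) -> float:
    """Ask the model every known case and return the total score."""
    return sum(score_answer(ask_model(case.prompt), case) for case in cases)


def fake_model(prompt: str) -> str:
    """Placeholder for a real AI tool; always returns the same partial answer."""
    return "It is funny because the father does not know blue and yellow make green."


cases = [
    KnownCase(
        prompt="What makes the colored pencil family joke funny?",
        required_concepts=[
            ["blue and yellow", "color mixing"],
            ["infidelity", "cheating", "suspicion", "suspects"],
        ],
    ),
    KnownCase(
        prompt="What makes the Batman bathroom joke funny?",
        required_concepts=[["bat-"], ["branding", "prefix"]],
    ),
]

total = run_suite(fake_model, cases)
print(f"{total} / {len(cases)}")  # prints "0.5 / 2"
```

The partial credit mirrors the half-point scores in the scorecard: the placeholder answer names the color mixing but never mentions the suspicion, so it earns half a point on the first case and nothing on the second. Keyword matching will miss correct answers phrased differently, so for real decisions a human should still read the answers.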

The Bottom Line

I thought these joke tests, which show that AI has trouble with nuance and reasoning, were a good way to show that the idea of AI replacing consulting firms and consultants is itself a joke that humans aren't yet getting. ChatGPT 5, even with its expected improved reasoning and its arrival expected in the next few weeks, won't necessarily change this fact.

The greatest danger isn't AI becoming too smart or capable. It's sophistry, where humans become too trusting of confident-sounding algorithms that fundamentally misunderstand how business actually works.

When billion-dollar decisions need to be made and someone has to take responsibility for outcomes, you want humans who actually understand context, culture, and consequences. You know… accountability. Consulting will be altered, but it's nowhere near death. In fact, it's more likely to evolve and become stronger, thanks to AI.

PixelPathDigital helps organizations use AI effectively without falling into the confidence trap. We enhance human judgment with AI capabilities while maintaining the strategic thinking and accountability that drive real results.

Enjoying the Pandora's Bot Newsletter series? Don't forget to subscribe! And if you think your friends or colleagues would enjoy it, please share thepandorasbot.com!