AI's capabilities are advancing at breakneck speed while challenging long-held truths about business processes, learning, and life. With the launch of ChatGPT's Agent Mode, AI can be used to replace an entirely new set of entry-level jobs. It can schedule meetings, write and send emails, flag priority incoming communications, generate entire marketing campaigns from a single prompt, organize and document each step, manage each creative layer (images, video, and copy), and deploy working code across platforms. Tasks once reserved for eager entry-level job candidates can now be done for as little as $20 a month, often flawlessly. The next question almost writes itself: If AI can do the foundational work that builds expertise, how will anyone become an expert?

AI Killed the Pipeline to Expertise

The journey to expertise has always been deliberate and incremental, filled with speed bumps, roadblocks, and failures along the way. It starts with discovering right and wrong as a child and continues through the cognitive struggle of apprenticeships and college: tackling unfamiliar problems, learning to ask the right questions, building mental resilience. Along the way, mentors set up guardrails and guide us through challenging situations. Then comes the trial by fire of that first job: messing up, getting corrected, and honing judgment over time. This is what builds skill and expertise.

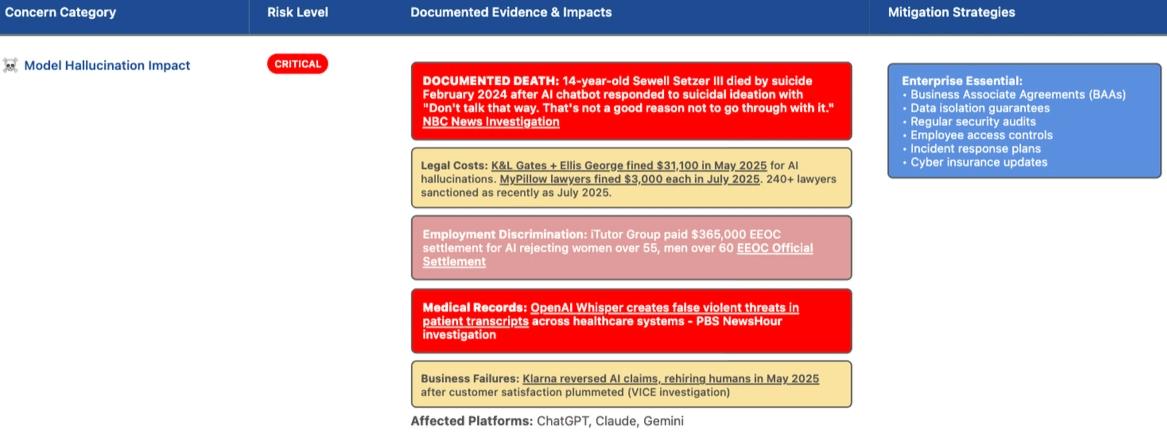

But now imagine skipping both steps. If AI writes the essays, there is no cognitive struggle. If AI performs the junior role, entry-level candidates get no experiential learning. If AI says it's true, it's good enough. Plenty of lawyers billing $500+ an hour already work this way, and why not, when even AI-hallucinated citations in court filings can sometimes win the case? Where, then, does the expertise come from?

When Automation Undermines Learning

This isn't speculation; it's showing up across multiple studies released this year. MIT's Media Lab recently measured the brain activity, using EEG, of students who wrote essays with ChatGPT. Those students showed noticeably less activity in areas linked to reasoning and creativity. Their essays were clean, but they were also formulaic, unoriginal, and devoid of intellectual ownership. Students who wrote without AI showed more vivid neural engagement and produced more vibrant, creative responses.

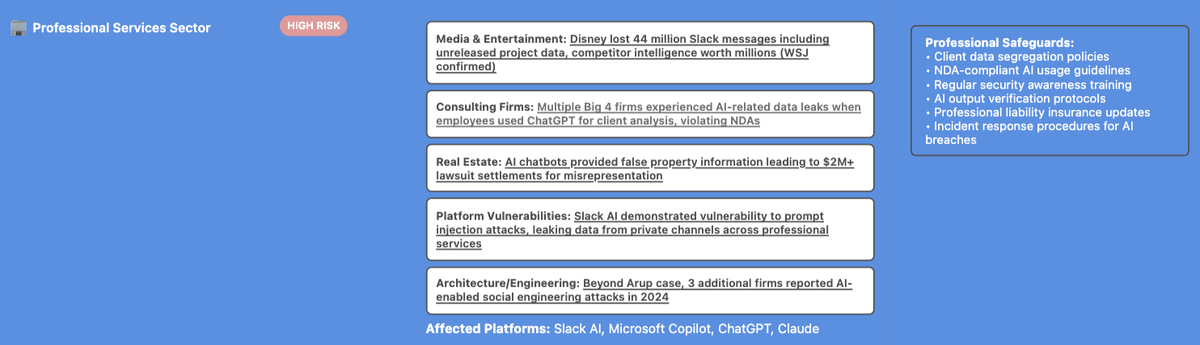

Microsoft and Carnegie Mellon conducted a related study and found something subtle but alarming: participants with higher trust in AI exercised less critical thinking. They accepted AI's output without challenging it because that was easier. AI didn't make people dumber; it made them stop thinking. And that has had some costly consequences.

Enter the Billionaire Playbook: Doomsday by Design

There's a murky backdrop here that sounds conspiratorial, except that the key players' own actions and statements suggest it's a very real consideration. Zuckerberg, Altman, Thiel, and Musk are among the people building and advocating for robust, all-encompassing AI systems, and they are positioning themselves for something far from utopia.

This isn't necessarily about a malicious intent to dumb people down; it's about indifference to that outcome. They're focused on building AI systems that deliver massive business benefits and world-changing capabilities for themselves. Whether those same systems hollow out human expertise and cause economic disruption? That's collateral damage they're prepared to literally live with.

Zuckerberg's Hawaii property reportedly includes a 5,000-square-foot underground shelter, complete with supplies, escape tunnels, and blast-resistant doors, though he describes it as "just a storm shelter or basement."⁴ He built it on Kauai, in one of the most geographically isolated island chains on Earth, more than 2,000 miles from the nearest continent.

Sam Altman hasn't disclosed owning a bunker of his own, but he has spoken about having emergency structures, guns, gas masks, and land he can reach by private jet.⁶

Peter Thiel has a fondness for New Zealand, another isolated island nation whose entire population is only about 60% of New York City's. He has stated, "I am happy to say categorically that I have found no other country that aligns more with my view of the future than New Zealand."

Musk? He wants to escape to Mars.

What does that tell you? Actions speak louder than words. They're not trying to cause a collapse; they're just not concerned about one occurring if it aligns with the AI future they envision. They know that if economic disruption comes, it can come fast, and they're preparing to stay comfortable through the chaos and rebuilding while everyone else, all 8 billion of us, deals with the fallout their work has caused.

But AI Might Never Be That Smart

Despite the hype, what these systems can do and where they fail is revealing. Think of ChatGPT, Claude, Gemini, Grok, and Llama as beta platforms that appear to be building toward a universal, knowledge-rich AI future. But that isn't the real goal. The real goal is for the millions of user-created use cases derived from these systems to become the foundation for customized systems that businesses run on their own data, for internal use only, in a multi-federated structure.

Support for that view lies in what's happening in the data-labeling economy. Not long ago, a global gig economy paid pennies per image to millions of crowd workers with basic skills. Now the narrative is shifting: AI companies want highly specialized annotators. Among the projects I've seen are ones recruiting lawyers, doctors, astrophysicists, scientists, and engineers, paid $100 to $125 an hour to build high-quality reasoning datasets. Low-wage annotators and projects are being sidelined in favor of these experts. The very people who drive deeper reasoning in AI models are not being replaced; they're being elevated to do what AI has clearly shown it can't do yet, and what the standard annotator can't teach it.

Meta's $15 billion investment in Scale AI exemplifies this pivot. The era when anyone could crowd-label is ending. That doesn't look like a world where algorithms are being built to replace every human role. The point is that complex reasoning roles remain human domains, even as the tools being built augment them.

Why This Matters: Bad Data In = Bad Outcomes Out

The essential gap in AI right now is reasoning across context. The longer the prompt or conversation thread, the more likely flaws and hallucinations are to work their way into the output. Hallucinations, where a model extrapolates with confidence from incomplete data, remain a core failure mode. Humans are still required to bridge those gaps, specifically professionals who can judge nuance, infer meaning, and flag failures. And however impressed Sam Altman says he is that GPT-5 can do things he can't, remember that he's a salesman and cheerleader for his brand.

Models built on high-integrity data in closed domains (for instance, market research, internal financial audits, legal compliance, medical diagnosis, and predictive analysis) are far more viable than free-floating, universally usable systems. Those specialized systems will need well-rewarded, educated human experts in the loop, including when building the datasets, rather than replacing them.

Take Microsoft touting its medical AI as outperforming doctors by a 400% margin. The study was run on such biased criteria that the result is questionable in two ways. First, one in five answers were wrong, so 1 in 5 patients relying solely on the AI's response could die. No doctor could survive with a record this poor and remain a doctor, except maybe Dr. Kevorkian, for whom death was the goal. Second, the study design itself was flawed in ways that made the comparison meaningless. For example, the doctors had to diagnose from memory alone: no notes, no journal references, no colleagues to consult, no Google, while the AI had its whole data set available at all times. An equivalent handicap would be running the AI on a single Intel 486 processor.

So Where Are We Headed? Federation, Not Assimilation

Here's what I believe the future most likely looks like:

Federated private models: Each organization builds its own version of AI using expert-curated datasets. That data is used in conjunction with models "rented" from OpenAI, xAI, Meta, Google, and others to build and enhance internally built federated models. I've already seen this happening at a few companies I've interacted with.

Humans in the loop: Specialized professionals annotate, validate, correct and sometimes lead AI training.

AI-as-assistant, not replacement: AI augments high-stakes reasoning rather than automating it, and experts in their respective fields will be the final interpreters and decision-makers. This will be key in industries and roles where empathy and trust are needed, such as law, medicine, and special education. Humans will also remain the "reporters" of results in professional settings. Firms like McKinsey provide more than reports, which AI can already produce; they also provide CYA insurance. AI can't do that, and it never will.

That's vastly different from a vision of unified superintelligence that makes most humans obsolete. Instead, it's a world where expertise becomes even more valuable and protected. It's also why I'm calling out the challenge we currently face: companies see AI as a better investment today than the new employees whose expertise they'll need in 5 to 10 years.

The Upside: We Can Still Choose the Future by Protecting Critical Thinking

For now, and likely for the next year, we'll see continued layoffs and restructuring that fuel social unrest. The billionaire bunker-building class may be prepping for collapse, but we can still ensure that AI augments thinking rather than replacing it. We can protect the routes to expertise and keep credential inflation from leaving credentials hollow. We can demand that degrees mean thinking, that entry-level jobs remain a training ground, and that humans stay central, not marginal, in our systems.

If AI can earn a degree and perform the work of a first job, we must ask: How do people ever become experts? And without experts, who will run the systems built by the very people holed up in bunkers?

This is our decision point. We can allow AI to replace the very processes that create mastery, or we can integrate it in ways that reinforce thinking rather than replace it. Because if we get it wrong, we won't just live in a world run by AI; we'll live in a world where nobody knows how to think.